In Part 1 we discussed what exactly a Community Meetup session at Microsoft Ignite is. Please feel free to have a read through to see what we did and how we did it. This entry discusses the results of that session at Ignite. During the session, multiple groups came up with some great talking points on the three main topics we covered concerning the Microsoft Bot Framework and Cognitive Services. These were what I thought were three of the main considerations in creating bots with the Microsoft Bot Framework, and for the most part they focused on how Microsoft Cognitive Services could help: user frustration when using bots, which problems can and cannot be solved with a bot application, and, lastly, how to make bots more inclusive to all people.

We had 10 tables of 6 people and, at least for the first topic, we recorded responses from twelve distinct teams. One of my favorites was “the Wall” team: people sitting in chairs along the wall who banded together to form their own discussion group. Other people along the wall chose to join existing tables for discussion. It was great!

Most of the information below reflects the opinions of the people attending the Microsoft Ignite Community Meetup BRK2388, Bots and Brains, and not necessarily my own.

Bot Frustration

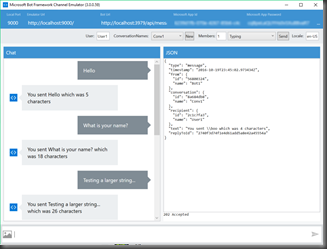

The premise of this section was threefold: How do I prevent frustration? How do I detect frustration? And how do I resolve frustration? All three are important considerations when creating a chat bot interface to an application. Before arriving in Orlando I had some preconceived notions of how to detect frustration, so it was interesting to see how many options the group came up with that I had not thought of.

With regard to detecting frustration, there were plenty of suggestions. Microsoft Cognitive Services provides the Text Analytics API, which scores the sentiment of text on a range from 0 to 100%, from very negative to very positive. Another team suggested using the Language Understanding Intelligent Service (LUIS) to detect curse words that indicate frustration. Beyond sentiment and curse words, groups suggested looking for repeated questions and entities (parameters), which indicate frustration at not getting a correct answer. Overall, I believe that by combining these three ideas we can be fairly competent at detecting most forms of frustration.
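
To make the three detection ideas above a little more concrete, here is a minimal Python sketch that combines them. It assumes the sentiment score has already been obtained elsewhere (for example from the Text Analytics API) and is expressed as a number from 0.0 (very negative) to 1.0 (very positive). The word list, the 0.2 threshold and the `FrustrationDetector` class are hypothetical, and the sketch does not call any Cognitive Services API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical list; a real bot might use a maintained profanity list
# or a LUIS intent trained on abusive language instead.
CURSE_WORDS = {"damn", "hell", "crap"}

# Assumed threshold on a sentiment score from 0.0 (very negative)
# to 1.0 (very positive), e.g. one produced by a sentiment service.
NEGATIVE_THRESHOLD = 0.2


def normalise(text: str) -> str:
    """Lower-case the text and drop punctuation so near-identical
    questions such as "Where is it?" and "where is it??" compare equal."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


@dataclass
class FrustrationDetector:
    repeat_limit: int = 2  # asking the same thing this many times counts as a signal
    seen: dict[str, int] = field(default_factory=dict)

    def signals(self, text: str, sentiment: float) -> list[str]:
        """Return the frustration signals found in a single user message."""
        found = []
        if sentiment < NEGATIVE_THRESHOLD:
            found.append("negative_sentiment")
        key = normalise(text)
        if set(key.split()) & CURSE_WORDS:
            found.append("curse_words")
        # Count how often this (normalised) question has been asked.
        self.seen[key] = self.seen.get(key, 0) + 1
        if self.seen[key] >= self.repeat_limit:
            found.append("repeated_question")
        return found
```

For example, asking “Where is my order?” twice, the second time with a low sentiment score, returns both `negative_sentiment` and `repeated_question`. A real bot would more likely compare LUIS intents and entities than normalised text when looking for repeated questions.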

Before frustration ever happens, there are things we can do to prevent it from starting. One group suggested using training data based on past feedback to ask leading questions that help get more accurate answers. Some of the suggestions were beautiful in their simplicity. For example, provide the option to see a tutorial, as we did for a project I worked on called the Accessibility Sport Hub (ASH). The tutorial sets expectations and offers suggestions on getting the most out of the bot. It also outlines what the bot can, and just as importantly cannot, do. One response applies both to prevention and to resolving frustration after it has occurred: hand the conversation off to a real person. For prevention, as soon as the bot detects that it may not be able to resolve the inquiry, it should have a way to hand the inquiry off to a real person. The bot collects the information, and a real person resolves the problem the bot might not be able to address.

Lastly, what do we do when things go badly and a frustrated or even angry person is using our bot? The most often repeated solution was to recognize when human intervention is required and hand the conversation off to a qualified individual for resolution. Another suggestion, from one of the “Wall” groups, was to provide additional options or help when frustration is encountered. Perhaps users don’t know what the bot is capable of, and giving them the option of learning what is available might help. Improving the feedback to the user was also suggested, and this also falls under the heading of prevention.
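
One way to combine these suggestions is an escalation policy: offer help the first time frustration is detected, and hand off to a person if it continues. The sketch below is hypothetical (the `EscalationPolicy` class, the `Action` values and the threshold of two frustrated turns are assumptions), and the `frustrated` flag would come from whatever detection approach the bot uses.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"      # carry on with the normal conversation
    OFFER_HELP = "offer_help"  # list what the bot can (and cannot) do
    HAND_OFF = "hand_off"      # transfer the conversation to a person


class EscalationPolicy:
    """Hypothetical policy: offer help on the first sign of frustration,
    then hand off to a human once frustration has been seen enough times."""

    def __init__(self, handoff_after: int = 2):
        self.handoff_after = handoff_after
        self.frustrated_turns = 0  # cumulative; not reset by calm turns

    def decide(self, frustrated: bool) -> Action:
        if not frustrated:
            return Action.CONTINUE
        self.frustrated_turns += 1
        if self.frustrated_turns >= self.handoff_after:
            return Action.HAND_OFF
        return Action.OFFER_HELP
```

With the default threshold, `decide(False)` returns `CONTINUE`, the first `decide(True)` returns `OFFER_HELP`, and the second returns `HAND_OFF`. The count is deliberately not reset after a calm turn, so a user who keeps getting frustrated still reaches a person.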

Humour is something else that can be introduced. Giving the bot a bit of a personality can help build a friendly relationship and perhaps reduce stress levels. One caveat: humour is not universal, so be careful that it doesn’t introduce more problems than it solves. One of the most popular suggestions was the “panic button” method: providing a way to ask for help in the middle of the conversation. Providing an escape, or some way to get back to the start and/or get help, might alleviate some of the frustration.
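
A “panic button” can be as simple as checking every incoming message for a few escape phrases before the current dialog sees it. This is a framework-independent sketch under assumed names: the phrases, the `route` function and the use of a plain list as the dialog stack are illustrative and are not part of the Bot Framework API.

```python
# Hypothetical escape phrases; a real bot might also show these as buttons.
ESCAPE_COMMANDS = {
    "help": "show_help",
    "start over": "restart",
    "talk to a person": "hand_off",
}


def route(message: str, dialog_stack: list[str]) -> str:
    """Check for an escape command before normal dialog handling.

    Escape commands work at any point in the conversation, so a user who
    is stuck deep in a dialog can always get back out.
    """
    command = ESCAPE_COMMANDS.get(message.strip().lower())
    if command == "restart":
        dialog_stack.clear()  # drop any half-finished dialogs
        return "restart"
    if command is not None:
        return command
    # No escape command: the current dialog (if any) handles the message.
    return dialog_stack[-1] if dialog_stack else "main_menu"
```

Because escape commands are checked first, “start over” clears the stack even when the user is several steps deep in a dialog, while any other message is passed to the dialog on top of the stack.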

Bot Solutions

What makes a good bot solution? It’s a question that isn’t asked often enough in development: we should ask “What is the best way to do this?” rather than start from “I know how I can do this.” So we asked the community meetup attendees what they thought. It was interesting to see what considerations they came up with when deciding which sorts of applications would work best as chat bots.

One of the more interesting considerations was the target age group of the app. As strange as it may seem, different generations approach communication in different ways. Another interesting one was globalization, and how bots might be better at dealing with multiple languages than many other solutions.

Once we got past demographics, groups suggested that when people need a specific answer faster than searching Bing or Google can provide, a directed bot conversation might be more useful. The ability to handle difficulties interactively was also seen as helpful. Connecting people to internal data repositories, such as employment information, HR and general inquiries, was considered ideal for a bot.

Under the “not so useful” category, several tables suggested that “advice” type applications might not work well as pure chat bots. For example, providing legal or medical advice might face moral and legal barriers, because the repercussions of providing improper or incorrect information are significant. Similarly, we probably don’t want bots handling 911 calls at this time. That said, a hybrid solution where a bot collects basic information and then hands the session off to a human might be reasonable in some cases.

Many groups felt that bots are not very helpful for very complex needs. For a bot to work, somebody has to write code for its responses, so the more branches there are in the path a user needs to follow, the harder it becomes for the bot to be effective.

Inclusive Bots

This category turned out to be a bit more difficult than the others. In many ways, people found it difficult to relate to the issues at hand. However, once you consider that building an inclusive bot is not about building a bot for specific people but for all people, you can start to see ways to help. Including everybody does not just mean accounting for disabilities, but also culture, language, gender and a whole host of other variables that make people unique.

One of the items that came up was voice. At Build 2017, Microsoft announced the ability for a bot to be available as a Cortana skill, which enables speech-to-text and text-to-speech handling so your bot doesn’t require keyboard or mouse input. That’s a huge leap forward in usability and inclusivity. Other items discussed included using Cognitive Services such as the Computer Vision API to estimate the age of a user.

Using images to convey information might also help work around language barriers (although the Bot Framework supports many languages, it might not cover all of them). Addressing gender differences was also mentioned, and here vision can help too, by detecting gender using the Computer Vision API.

To Wrap It Up

Privacy was not mentioned, and I think we always need to be aware of people’s privacy. When we use Cognitive Services, we should always make sure we have permission where necessary, giving people the choice to opt in.
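
As a rough illustration of asking permission first, the sketch below only runs an analysis step (such as sending a photo to a vision service) when the user has opted in, and returns `None` otherwise so the bot can take a path that doesn’t need it. `ConsentStore` and `analyse_if_permitted` are hypothetical names, and the `analyse` callable stands in for whatever service call the bot would actually make.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class ConsentStore:
    """Hypothetical record of which services each user has agreed to."""
    granted: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, user_id: str, service: str) -> None:
        self.granted.setdefault(user_id, set()).add(service)

    def revoke(self, user_id: str, service: str) -> None:
        self.granted.get(user_id, set()).discard(service)

    def has(self, user_id: str, service: str) -> bool:
        return service in self.granted.get(user_id, set())


def analyse_if_permitted(
    store: ConsentStore,
    user_id: str,
    service: str,
    analyse: Callable[[Any], Any],
    data: Any,
) -> Optional[Any]:
    """Run analyse(data) only if the user opted in to this service;
    otherwise return None without sending the data anywhere."""
    if not store.has(user_id, service):
        return None
    return analyse(data)
```

Keeping consent per service means a user can agree to, say, speech handling without also agreeing to image analysis, and revoking consent takes effect on the next call.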

Overall, it was quite remarkable how many different opinions and ideas came out of our room of just 85 people. Everybody stayed right to the end to make sure their voice was heard. The OneNote notebook is available to those who attended my session, and you can view it too if you wish. Also, join the conversation at the Microsoft Tech Community!

I hope that everybody got something out of the session, whether they met a like-minded person, found information from me or others in the room that might help in decision making about bots and AI, or just enjoyed the break from the traditional session format. I thank all the attendees and hope that I have the opportunity to do it again next year.